[Paper Summary] Chain-of-Thought Prompting Elicits Reasoning in Large Language Models

Google's paper tells us that LLMs might be able to demonstrate reasoning if prompted correctly.

What is Chain of Thought prompting?

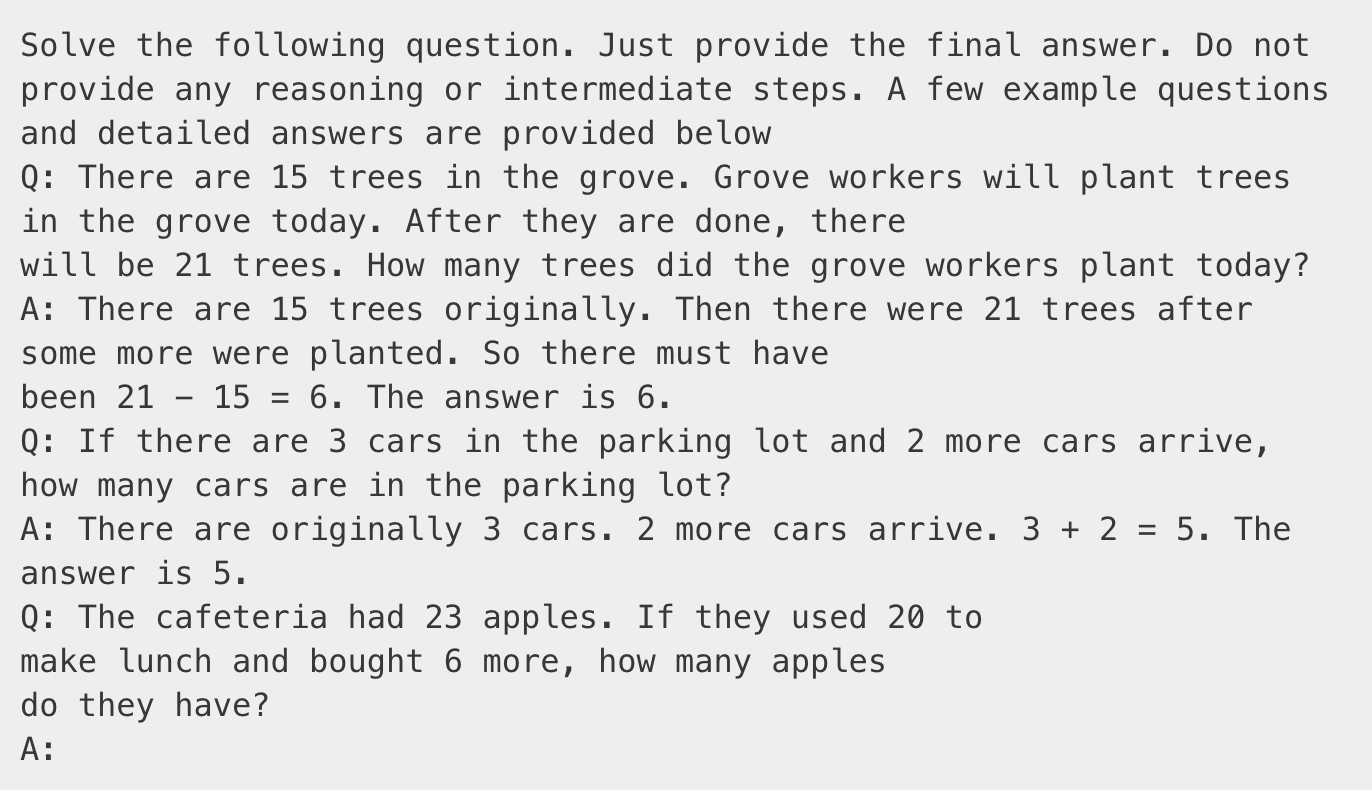

LLMs answer better when they generate reasoning steps before generating the final answer. These reasoning steps are called Chain of Thought (CoT). They represent the steps needed to solve the problem. Adding examples to the prompt where the CoT is demonstrated before arriving at the final answer nudges LLMs to answer a question similarly. Consequently, this discourages the LLM from directly spitting out the final answer, which would probably be incorrect. For example,

On the left, the prompt has an example question-answer pair, along with the question the LLM is supposed to solve. Particularly, the answer in the example is just the final answer without any explanation. However, this is not the case for the prompt on the right. The reasoning steps or CoT is provided before arriving at the final answer. The paper claims that adding such detailed examples in the prompt significantly boosts LLM’s capability to solve complex questions.

As an example, he is a CoT-styled prompt to solve math word problems,

Each example in the above prompt provides step-by-step reasoning before arriving at the final answer. If one supplies their question using this prompt, the LLM will most likely answer similarly. It will try to reason, generate intermediate steps, and then provide the final answer.

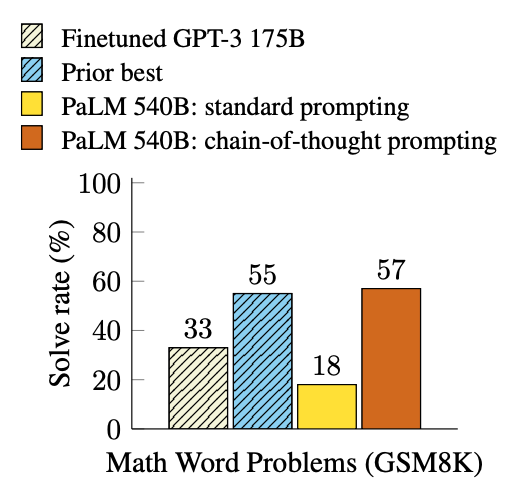

CoT prompting is simple to use. It does not require separate fine-tuning or any model training. All we need are well-reasoned examples to insert in the prompt. The coT approach is advantageous when the LLM is asked to solve a complex problem with multiple steps. Such problems can be broken down into sub-problems. Solving them leads to the final answer. Using CoT examples in the prompt, the authors could produce impressive results. For instance, on GSM8K, which is a dataset of grade school math problems, they reported the following results

Ablation Studies

The authors also performed several ablation studies to establish the usefulness of inserting CoT examples in the prompt. Particularly, they tried answering the following questions —

What if the gains come from the model generating mathematical equations before giving the final answer? Meaning, if we remove the CoT examples from the prompt but ask the model to generate equations or reasoning steps before the final answer, do we get similar results? If yes, then inserting CoT examples don’t have significant effect. I imagine the prompt to be like

What if CoT merely allows the model to spend more time thinking, and the actual intermediate steps are not helpful by themselves? From my understanding, the model could generate tokens to ‘buy time’ to think. Here, thinking could mean processing the prompt repeatedly with every token generated, which could be interpreted as ‘solving’ the question. To verify this, the authors asked the model to produce a sequence of dots (…) equal to the number of characters needed to solve the problem. I imagine the prompt to be like

What if the CoT examples are enough and the model need not generate the reasoning steps? The hypothesis is that the CoT examples in the prompt allow the model to access relevant knowledge. After that, the model does not need to generate reasoning tokens; hence, it can directly answer the question. The authors ask the model to generate the reasoning tokens after answering the questions instead of before. I imagine the prompt to be like

In all the ablation studies, the authors found that the prompt's examples and generating CoT are essential.

CoT’s relation with model size

Another practical tip for CoT prompting is that it works only for large enough models. Authors found substantial gains in CoT prompting for models >100B parameter count. For smaller models, CoT produced seemingly logical but incorrect reasoning. Moreover, CoT hurts performance if used with <10B parameter models. This could be attributed to smaller models’ inherently poor semantic and logical capabilities.