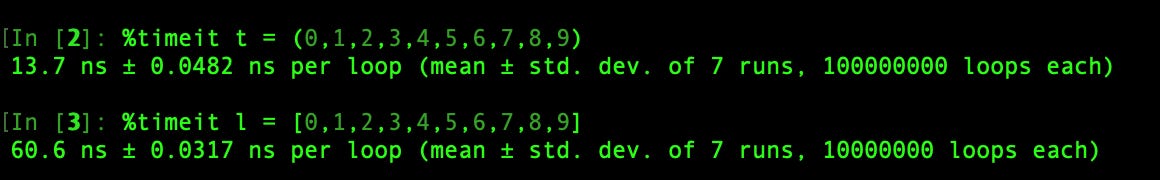

[Notes] Python Lists vs Tuples from a performance perspective

Finer details about everyday Python data sctructures to make your code more efficient.

So far, I knew that Python lists are mutable and tuples aren’t, but that factoid rarely helped me. I remember being asked when to use tuples over lists in an interview, and I had no answer. Much later, I stumbled upon a section in the book High-Performance Python that gave me exactly what I wished to understand.

Lists are more flexible than tuples. Lists can be expanded using operations like .append() or modified via assignment some_list[i] = some_element.

So why would anyone use tuples at all?

There is a cost to the flexibility, which is understood by inspecting the inner workings of the append operation. Consider an array of size N that we wish to append an element, resulting in an array of size N+1. Python should request the OS to allocate extra memory for the new element. However, there’s more. It over-allocates space to accommodate more than one item. The idea is that an append operation may be the start of many more appends (in a for loop, for example). Hence, it is cheaper for Python to simultaneously make space for many elements. The next few appends become cheaper. The same allocation style repeats when the array utilizes the current chunk of over-allocation.

In Python3, the over-allocation pattern follows the formula

allocated = size + (size >> 3) + (3 if size < 9 else 6) # size > 0>> is called the right shift operator. For example, 63 >> 2 shifts the binary representation of 63, 111111, by two bits to the right, resulting in 001111, or 15 in base 10. You can execute this operation in an IPython shell and check.

If size = 1, the allocated memory is

1 + (1 >> 3) + (3 if 1 < 9 else 6) = 1 + 0 + 3 = 4This means that if we append to an array with the resulting size of 1, Python creates space for three more appends. Only when the append results in size 5 is this over-allocation repeated -

5 + (5 >> 3) + (3 if 5 < 9 else 6) = 5 + 0 + 3 = 8Creating space for up to 8 elements. Note that this over-allocation only happens during an append. If a list is created directly, no extra allocation occurs.

But why over-allocate at all?

For an array of size 991, the over-allocation is 129, which is not insignificant. In addition, if you have a nested array with many small lists, the extra memory can add up. Such a situation is not uncommon in wrangling large datasets.

Consider the process of appending. An element “C” is appended to an initialized list L = [“A”, “B”]. Appending once results in a three-element list. As discussed previously, Python allocates space for up to four elements. It creates the new array and copies the initial list and the appended element.

Copying is an expensive operation occurring in O(n) time. Without over-allocation, this process would occur for every append, making it incredibly slow.

How are tuples better?

The immutably of tuples has some advantages.

Tuples are cached by the Python runtime, which means that we don’t need to talk to the kernel to reserve memory every time we want to use one.

- High Performance Python, Page 66

Generally, Python clears the memory occupied by any unused variable (this process is called garbage collection). However, as stated in the book, for tuples of size less than 20, Python will keep that block to itself for any tuple of the same size created in the future. This reduces the overhead of Python runtime having to communicate with the OS.

However, I observed similar behavior when I tried larger tuples with larger integers (FYI, I am running it on Python 3.9.7). So, I am unsure what the precise rules for tuple caching are.